2025-02-18 equivariant lecture 3

[[lecture-data]]

2025-02-18

Readings

- a

n. Subject

Invariant functions on sets

Zaheer et al 2017

Goals:

- Define a class of functions that can deal with sets/multisets as inputs

- Translate this class of functions into an efficient neural network

- Show that it works well (state-of-the-art) for several real-world applications

Sets/multisets

+++ see notes

We can think of these as

example

Motivation

- sometimes the order is not important for the "downstream task"

- Applications in the paper

- learning to estimate pop stats (mean, variance)

- learning to sum digits from images

- point cloud classification

- outlier detection

- predicting a set of tags (for text)

prior implementations of invariant functions on these sets

Invariant polynomials

is poly that can be written as combo of elementary symmetric polynomials

Equivalently

+++++ see notes

This requires

Another way to describe them

Canonicalization

Given

A function

choose a "representative" or "template" of my tuple

Deep Sets Paper

Input

for suitable

- sum does not need to be a sum. Just needs to be a permutation invariant function applied to each element in the set

(can think of this as a layer in a neural network)

Wait whoa

- Can write an invariant function as a sum of just one feature per element

Previous polynomial representation, we needed

- will see in proof that perhaps we can get simpler representations by using a higher dimensional latent space (

)

Note

in 2nd lecture

- if

and is finite then (group averaging)

Here,

But Deep Seets tells us that we only need to sum over

Proof (sketch)

- use

to encode each possible input into a real number - injective over the whole set of sets

- learn

that executes that function on the set

Since

Let

such that

- this gives us a real number per orbit and then learning an invariant function is the same as learning the function in each orbit representative

There are also better theoretical proofs of the same thing Tabaghi and Wang 2024

- sets of

elements in

If this function is

and

architecture (++++++see notes)

point clouds

invariant functions on point clouds

motivation:

- emulation of cosmological simulations

- galaxy properties preditions from dark matter using only simultations

"regression" framework for these types of movements

++++ see notes

- invariant to translations

- rotations

- reflections

translations

- center point cloud (translation invariance w sorting getting permutation invariance - choosing a representative from our orbit)

permutations

Want

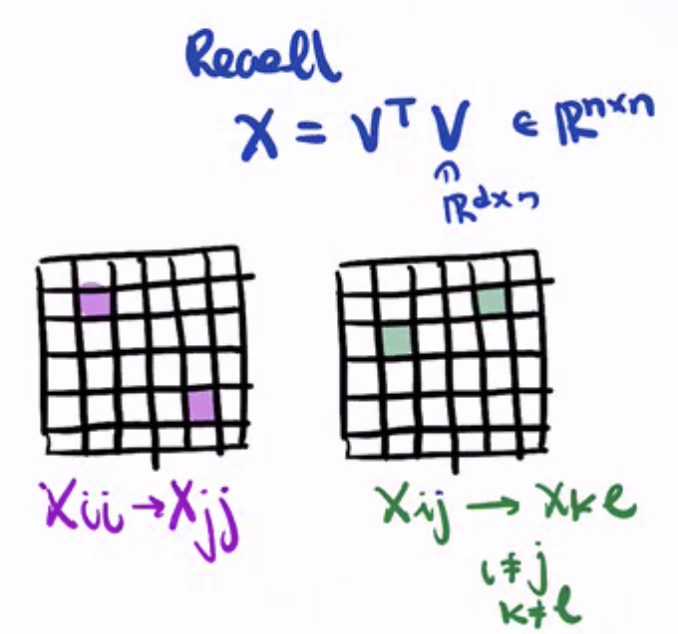

is invariant - where

is gram matrix

invariant by permutations acting by conjugations - symmetry of graphs that we saw in the first class

- Graph neural networks (cover GNNs in next lectures)

want functions that are invariant to permutations of rows and columns

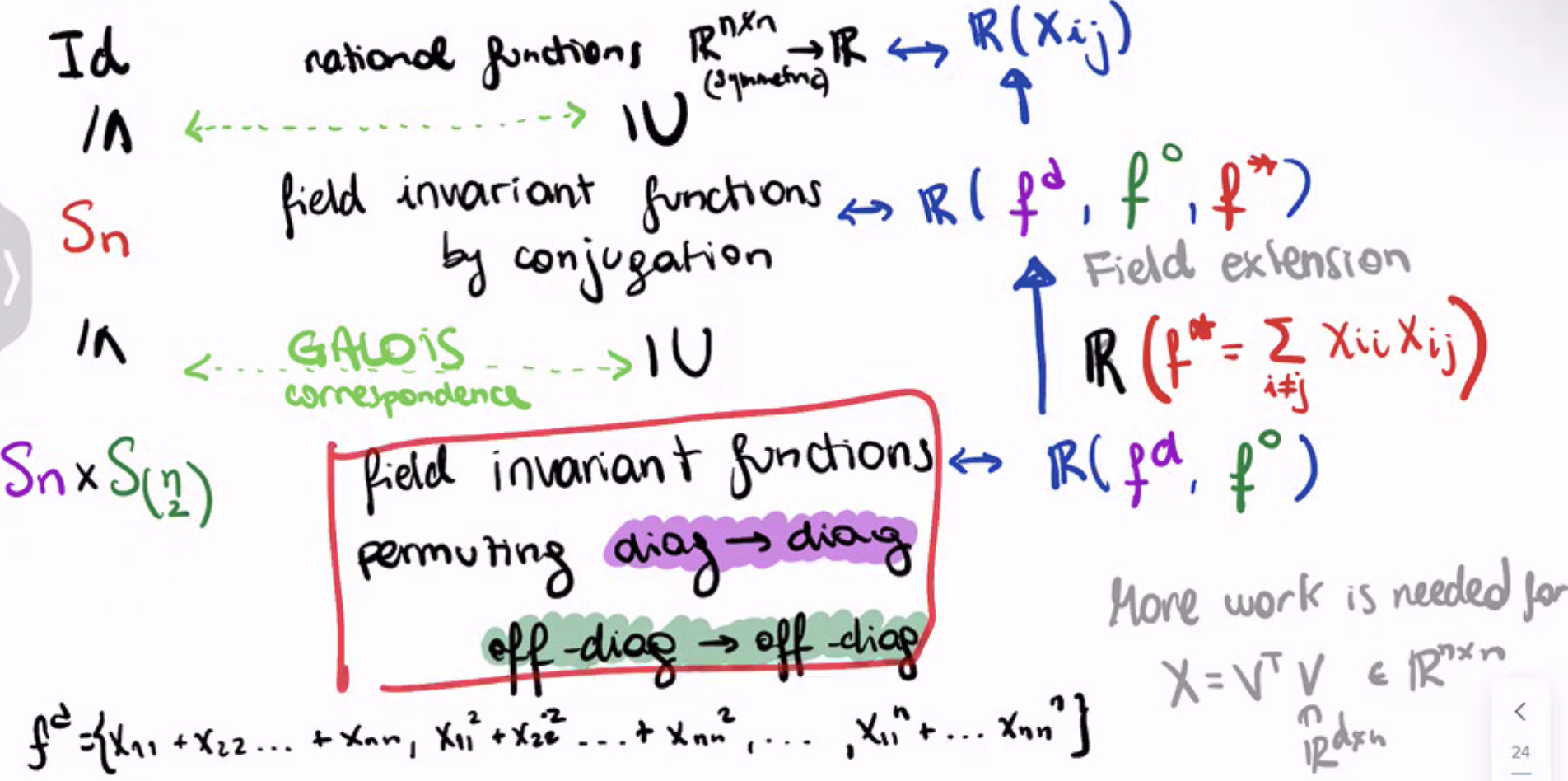

Why invariant theory?

- construct the graph with

complexity, we'll be able to learn invariant functions with complexity - ++++ see notes

- Galois theory - interesting mathematical properties

Galois Theory (setup)

- always sending diag to diag and off-diag to off-diag

- (permuting rows and columns at the same time)

the

++++see notes

Galois theory approach

- idea: if we construct a set of invariants that are only fixed by the desired group (ie no other bigger group fixes all the invariants) then those invariants generate the field of invariant functions

- (fundamental theorem rephrased)

Rosenlicht (1956) the orbits that the field generators fail to uniquely identiyfy (bad) has to satisfy that a finite set of equations (bad set is measure zero)

- field extension

"ties up" the permutation of diagonals and off diagonals - constrains the consistency of the permutation between diagonals and off diagonals

- this generates the field of invariant functions

- can construct a polynomial that satisfies this property

++see notes

that is the field generated by

And note that

- separating orbits under group actions is considered

(galois theory is not a necessary argument, uses easier argument - construct set of invariants that are only fixed by desired group, then can separate orbits almost everywhere)

using Deep Sets

universally approximate invariant functions on symmetric function sets outside an invariant, closed, zero-measure "bad set" where orbits are not separable

From

- consider

"centers" that is and S_n invariant - ex

-means centroids ordered by 2norm

- ex

Invariant machine learning model with

Theorem (Huang Blum-Smith 2024 etc)

- the machine learning model above can universally approximate invariant functions on symmetric function sets outside an invariant, closed, zero-measure "bad set" where orbits are not separable