node-level task

subject:: Data Science Methods for Large Scale Graphs

parent:: Graph Signals and Graph Signal Processing

theme:: math notes

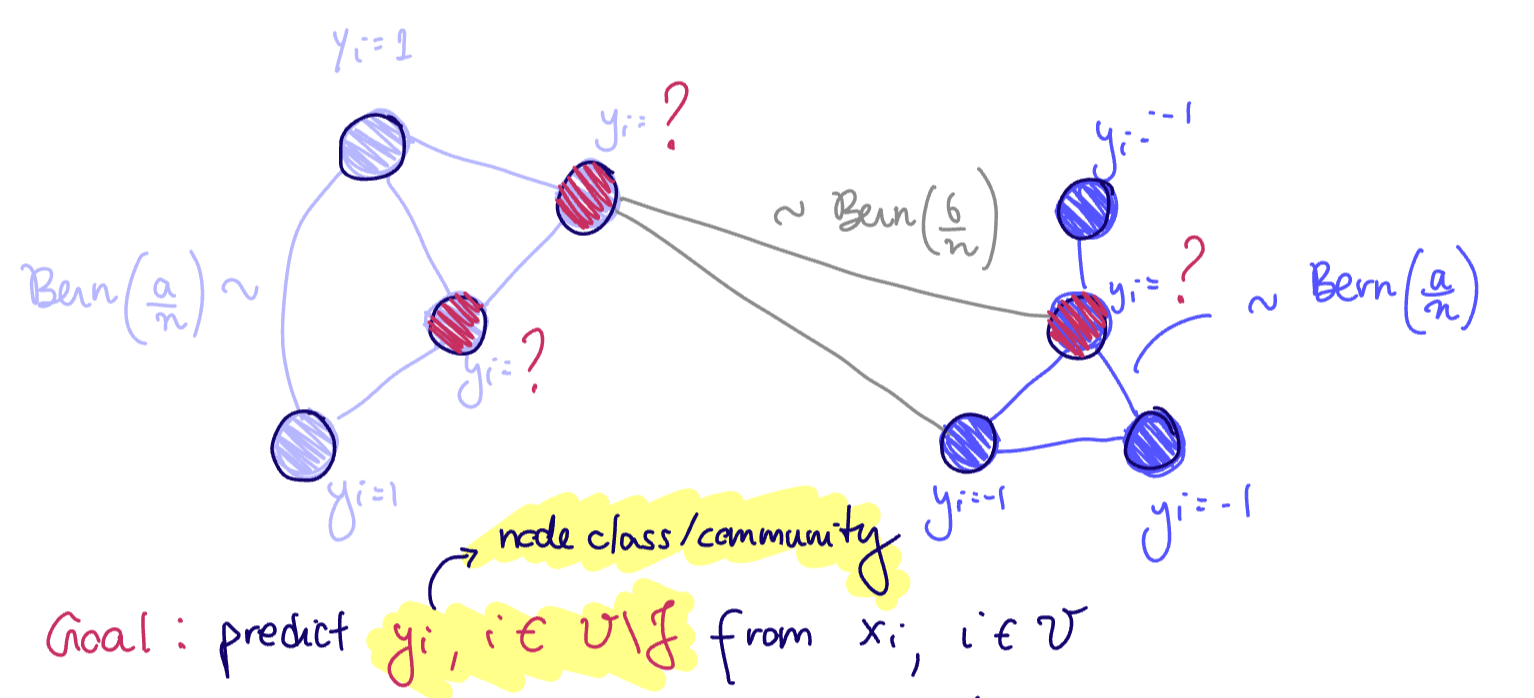

Node-level tasks (sometimes called transductive learning or semi-supervised learning) are defined on a single graph whose nodes carry both features (graph signals) and labels.

We assume we only observe the labels of a subset of the nodes; the labels of the remaining nodes are unknown.

Consider the contextual stochastic block model (contextual SBM):
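A minimal sketch of sampling a two-community contextual SBM, assuming one common variant: labels $y_i \in \{-1,+1\}$ drawn uniformly, an edge between $i \neq j$ with probability $p$ if $y_i = y_j$ and $q$ otherwise, and node features $x_i = y_i \mu + \sigma z_i$ with standard Gaussian noise $z_i$. The symmetric $\pm\mu$ means and the helper name `sample_csbm` are illustrative assumptions, not necessarily the exact model used in the lecture.

```python
import numpy as np

def sample_csbm(n, p, q, mu, sigma=1.0, seed=0):
    """Sample a two-community contextual SBM (hypothetical helper).

    n: number of nodes
    p: within-community edge probability
    q: across-community edge probability
    mu: feature mean for community +1 (community -1 gets -mu), assumed symmetric
    sigma: standard deviation of the Gaussian feature noise
    Returns adjacency A (n, n), features X (n, d), labels y (n,).
    """
    rng = np.random.default_rng(seed)
    # Community labels y_i in {-1, +1}, drawn uniformly at random
    y = rng.choice([-1, 1], size=n)
    # Edge probability is p for same-community pairs and q otherwise
    probs = np.where(np.equal.outer(y, y), p, q)
    # Sample the strict upper triangle (no self-loops), then symmetrize
    upper = np.triu(rng.random((n, n)) < probs, k=1)
    A = (upper | upper.T).astype(float)
    # Node features: community-dependent mean plus Gaussian noise
    mu = np.asarray(mu, dtype=float)
    X = y[:, None] * mu[None, :] + sigma * rng.standard_normal((n, mu.size))
    return A, X, y

# Example: sparse graph with assortative communities and 2-D features
A, X, y = sample_csbm(n=200, p=0.1, q=0.02, mu=[1.0, 0.0])
```

When $p \neq q$ the graph carries information about the communities, and when $\mu \neq 0$ the features do too; a node-level model can combine both sources.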

If

Goal: predict the labels of the nodes whose labels are not observed.

Hypothesis class: graph convolutions.
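A minimal sketch of a graph convolution, assuming the graph signal processing definition as a polynomial in a graph shift operator $\mathbf{S}$ (e.g. the adjacency matrix): $\mathbf{Y} = \sum_{k=0}^{K} h_k \mathbf{S}^k \mathbf{X}$. The function name and the scalar taps $h_k$ are illustrative choices; each multiplication by $\mathbf{S}$ only combines values from neighboring nodes.

```python
import numpy as np

def graph_convolution(S, X, h):
    """Apply the graph convolution Y = sum_k h[k] * S^k X (hypothetical helper).

    S: (n, n) graph shift operator, e.g. an adjacency matrix
    X: (n, f) graph signal with f features per node
    h: sequence of K+1 scalar filter taps h_0, ..., h_K
    """
    Y = np.zeros_like(X, dtype=float)
    Z = np.asarray(X, dtype=float)  # Z holds S^k X, starting at k = 0
    for hk in h:
        Y += hk * Z
        Z = S @ Z  # one more shift: each node aggregates its neighbors' values
    return Y
```

Computing $\mathbf{S}^k \mathbf{X}$ by repeated shifts avoids forming matrix powers. In a GNN layer the scalar taps are typically replaced by learnable weight matrices, $\sum_k \mathbf{S}^k \mathbf{X} \mathbf{H}_k$, followed by a pointwise nonlinearity.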

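Problem: Define a mask selecting the nodes whose labels are observed, and learn the graph convolution by minimizing the loss over the masked (labeled) nodes only.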
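A minimal sketch of the masked training problem, assuming a boolean mask vector over nodes, a scalar feature per node, and squared loss (swapped in here so the fit has a closed form via least squares). Since $\sum_k h_k \mathbf{S}^k x$ is linear in the taps $h$, minimizing $\sum_{i:\,\text{mask}_i} \big(y_i - [\sum_k h_k \mathbf{S}^k x]_i\big)^2$ is an ordinary least-squares problem restricted to the labeled rows. The helper name `fit_masked_filter` is hypothetical.

```python
import numpy as np

def fit_masked_filter(S, x, y, mask, K):
    """Fit filter taps h_0..h_K by least squares on the labeled nodes only.

    S: (n, n) shift operator, x: (n,) scalar node feature,
    y: (n,) labels (only y[mask] is ever read), mask: (n,) boolean,
    K: filter order. Returns taps h and predictions for all n nodes.
    """
    y = np.asarray(y, dtype=float)
    # Columns of B are the shifted signals x, Sx, ..., S^K x
    cols = [np.asarray(x, dtype=float)]
    for _ in range(K):
        cols.append(S @ cols[-1])
    B = np.stack(cols, axis=1)  # shape (n, K+1)
    # The loss only sees the rows (nodes) selected by the mask
    h, *_ = np.linalg.lstsq(B[mask], y[mask], rcond=None)
    # The learned filter still produces a prediction at every node
    return h, B @ h
```

For labels in $\{-1,+1\}$, as in the two-community CSBM, one option is to threshold the predictions with `np.sign`; a GNN would instead be trained by gradient descent on a classification loss such as cross-entropy.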

Application: infer a node's class/community/identity locally, i.e., without global communication (each node only exchanges information with its neighbors), unlike spectral clustering techniques, which require eigenvectors (global graph information).
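The locality claim can be checked numerically with a minimal sketch: a filter with taps $h_0, \dots, h_K$ only mixes information within $K$ hops, so perturbing the signal at a node more than $K$ hops away leaves the output at node 0 unchanged. The path graph, the taps, and the helper names below are arbitrary illustrative choices.

```python
import numpy as np

def path_graph(n):
    """Adjacency matrix of the path 0 - 1 - ... - (n-1)."""
    S = np.zeros((n, n))
    idx = np.arange(n - 1)
    S[idx, idx + 1] = S[idx + 1, idx] = 1.0
    return S

def filter_output(S, x, h):
    """y = sum_k h[k] S^k x, computed with repeated (local) shifts."""
    y, z = np.zeros(len(x)), np.asarray(x, dtype=float)
    for hk in h:
        y += hk * z
        z = S @ z
    return y

n, h = 10, [1.0, 0.5, 0.25]  # K = 2, so a 2-hop receptive field
S = path_graph(n)
x = np.random.default_rng(0).standard_normal(n)
y0 = filter_output(S, x, h)

def perturb(j):
    """Output after adding 1 to the signal at node j."""
    x2 = x.copy()
    x2[j] += 1.0
    return filter_output(S, x2, h)

# Node 5 is 5 hops from node 0: its change never reaches node 0
far_change = perturb(5)[0] - y0[0]
# Node 2 is exactly 2 hops away: node 0 changes by h[2] * [S^2]_{0,2} = 0.25
near_change = perturb(2)[0] - y0[0]
```

An eigenvector of $\mathbf{S}$, by contrast, generally depends on the entire graph, which is why spectral clustering needs global information.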